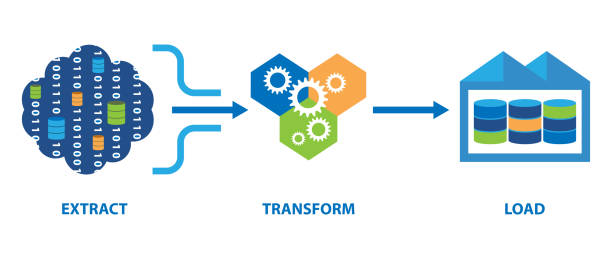

The ETL Process in Data Automation: Extract, Transform, Load for Data Integration and Analysis

The ETL (Extract, Transform, Load) process is a cornerstone of data automation in data management, analytics, and business intelligence. It collects data from many source systems, refines it, and moves it into a target repository, usually a data warehouse or database, in a standardized, usable format. This article walks through each stage of the ETL process, its role in data automation, common challenges, and best practices.

1. Extract:

The initial phase of ETL, extraction, is where data is sourced from a range of origins. These sources can be diverse, including relational databases, flat files, APIs, log files, web services, and more. The primary goal here is to gather data and bring it into a controlled environment where it can be processed and analyzed. Extraction methods vary and can include:

- Batch Processing: This is a traditional approach where data is extracted at predefined intervals, such as daily, weekly, or monthly. Batch extraction is often used when real-time data is not necessary or feasible.

- Real-time Extraction: When immediate access to data is essential, real-time or near-real-time extraction is used. This method propagates changes in the source data to the target system with minimal delay.

- Change Data Capture (CDC): CDC identifies and captures only the changes made to the source data since the last extraction. It is especially useful in real-time and near-real-time ETL scenarios because it reduces the amount of data transferred.
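A common lightweight way to approximate change capture is a query-based "high-water mark": each run extracts only rows whose modification timestamp is newer than the last value seen. The sketch below assumes a hypothetical `orders` table with an `updated_at` column and uses Python's built-in `sqlite3` module for illustration. Log-based CDC tools, which read the database's transaction log instead of querying the table, are a different technique.

```python
import sqlite3


def extract_changes(conn, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark.

    Assumes a hypothetical `orders` table whose `updated_at` column holds
    ISO-8601 text, so string comparison matches chronological order.
    """
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T09:00:00"),
        (2, 25.5, "2024-01-02T10:30:00"),
        (3, 7.25, "2024-01-03T08:15:00"),
    ],
)

# First run: everything newer than the initial watermark.
batch, watermark = extract_changes(conn, "1970-01-01T00:00:00")
print(len(batch), watermark)  # 3 2024-01-03T08:15:00

# A later update is picked up on the next run; unchanged rows are skipped.
conn.execute("UPDATE orders SET amount = 30.0, updated_at = '2024-01-04T12:00:00' WHERE id = 2")
batch, watermark = extract_changes(conn, watermark)
print(batch)  # [(2, 30.0, '2024-01-04T12:00:00')]
```

This simple approach has two known gaps: deleted rows are never seen, and a row committed later with exactly the same timestamp as the stored watermark can be missed by the strict `>` comparison. Log-based CDC avoids both, at the cost of more infrastructure.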

Once data is extracted, it's essential to perform an initial examination, known as data profiling. Data profiling helps identify the structure of the source data, including data types, formats, and potential issues such as missing values or inconsistencies. This preliminary analysis is crucial for making informed decisions on how to handle the data during the subsequent transformation phase.
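A first-pass profile can be as simple as counting, for each field, which value types appear, how many values are missing, and how many are distinct. The minimal sketch below works on a list of Python dictionaries; the field names and sample records are hypothetical.

```python
from collections import Counter


def profile(records):
    """Summarize each field: observed Python types, missing count, distinct count."""
    fields = sorted({key for record in records for key in record})
    report = {}
    for field in fields:
        values = [record.get(field) for record in records]
        # Treat None and empty strings as missing.
        present = [v for v in values if v not in (None, "")]
        report[field] = {
            "types": dict(Counter(type(v).__name__ for v in present)),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
        }
    return report


# Hypothetical sample extracted from two differently configured sources.
sample = [
    {"id": 1, "signup": "2024-01-05", "country": "US"},
    {"id": 2, "signup": "05/01/2024", "country": ""},
    {"id": "3", "signup": None, "country": "DE"},
]

for field, stats in profile(sample).items():
    print(field, stats)
```

On this sample the profile shows that `id` arrives as both integers and strings and that `signup` and `country` each have a missing value. The `signup` column also mixes two date formats, which a type count alone does not reveal, so real profiling tools usually add pattern and range checks as well.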

2. Transform:

The transformation phase is where the extracted data undergoes a series of operations to ensure that it is clean, consistent, and ready for analysis. This phase involves several key activities:

- Data Cleaning: Extracted data can be messy, containing errors, missing values, and inconsistencies. Data cleaning involves processes like deduplication, data validation, and data correction to ensure the data's quality and accuracy.

- Data Structuring: Different source systems often store data in varying formats. Transforming data structures, such as converting date formats or normalizing data, is necessary to make it uniform and compatible with the target system.

- Data Enrichment: To enhance the value of the data, additional information can be added during the transformation process. This may include appending geographic data, demographic details, or other reference data to provide a richer context for analysis.

- Aggregation: Aggregation is the process of summarizing data, often used in data warehousing to create pre-computed summary tables, which can significantly improve query performance.

- Data Validation: Validation rules are applied to ensure that data conforms to predefined standards. If data doesn't meet these standards, it's flagged for further investigation and potential correction.

- Business Rules and Calculations: Businesses often need to apply specific rules, calculations, or transformations to make their data meaningful and relevant for analysis, such as calculating net profit, generating sales forecasts, or computing customer lifetime value.

- Data Integration: Data from various sources may need to be integrated to create a unified and comprehensive dataset. This involves linking data across different systems and reconciling differences in data structures and formats.
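The sketch below chains several of these activities over a small batch of hypothetical order records: structuring (type conversion, ISO-8601 dates, normalized country codes), cleaning (deduplication on `order_id`), validation (rejected rows kept with a reason), enrichment (a hypothetical region lookup table), a simple net-profit business rule, and a daily aggregation. The field names, accepted date formats, and rules are assumptions for illustration, not a prescribed design.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical reference data used for enrichment.
REGION_BY_COUNTRY = {"US": "Americas", "DE": "EMEA"}

# Assumed source date formats: ISO-8601 and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Normalize a date string to ISO-8601 by trying each known source format."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date {text!r}")


def transform(raw_rows):
    """Return (clean_records, rejected_rows_with_reason)."""
    clean, rejected, seen_ids = [], [], set()
    for row in raw_rows:
        try:
            # Structuring: consistent types, ISO dates, normalized codes.
            record = {
                "order_id": int(row["order_id"]),
                "date": parse_date(row["date"]),
                "revenue": float(row["revenue"]),
                "cost": float(row["cost"]),
                "country": row["country"].strip().upper(),
            }
        except (KeyError, TypeError, ValueError, AttributeError) as exc:
            rejected.append((row, f"unparseable: {exc}"))
            continue
        # Cleaning: skip repeated order IDs (deduplication).
        if record["order_id"] in seen_ids:
            continue
        seen_ids.add(record["order_id"])
        # Validation: set aside rows that break a rule for later review.
        if record["revenue"] < 0:
            rejected.append((row, "negative revenue"))
            continue
        # Enrichment: attach a region from reference data.
        record["region"] = REGION_BY_COUNTRY.get(record["country"], "Unknown")
        # Business rule: a simple net-profit calculation.
        record["net_profit"] = record["revenue"] - record["cost"]
        clean.append(record)
    return clean, rejected


def daily_totals(records):
    """Aggregation: pre-compute net profit per day."""
    totals = defaultdict(float)
    for r in records:
        totals[r["date"]] += r["net_profit"]
    return dict(sorted(totals.items()))


raw = [
    {"order_id": "1", "date": "2024-03-01", "revenue": "100", "cost": "60", "country": "us"},
    {"order_id": "1", "date": "2024-03-01", "revenue": "100", "cost": "60", "country": "us"},
    {"order_id": "2", "date": "01/03/2024", "revenue": "50", "cost": "20", "country": "DE "},
    {"order_id": "3", "date": "2024-03-02", "revenue": "-5", "cost": "0", "country": "FR"},
    {"order_id": "4", "date": "March 2", "revenue": "10", "cost": "4", "country": "US"},
]
clean, rejected = transform(raw)
print(len(clean), len(rejected))  # 2 2
print(daily_totals(clean))  # {'2024-03-01': 70.0}
```

Keeping rejected rows together with a reason, rather than silently dropping them, is one way to implement the "flagged for further investigation" step described under Data Validation.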

3. Load:

The final step in the ETL process is data loading, where transformed and refined data is placed into a target system. The target system can be a data warehouse, a relational database, a data lake, or any other storage solution designed to accommodate the data for analytical purposes. Loading methods can include:

- Batch Loading: In this approach, data is loaded in bulk, typically in predefined batches, such as daily, weekly, or monthly. Batch loading is suitable for scenarios where real-time data access is not a requirement.

- Incremental Loading: Only data that is new or has changed since the last load is written to the target. This approach is efficient for large datasets and suits pipelines that must refresh data frequently.

- Streaming: When immediate access to data is critical, data is transmitted to the target continuously as it is generated, so it becomes available for analysis with minimal delay.
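Incremental loading is often implemented as an upsert: insert rows whose key is new and update rows whose key already exists. This sketch uses SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` syntax (available since SQLite 3.24) through Python's `sqlite3` module. The `fact_orders` table and its columns are hypothetical, and other databases express the same idea with `MERGE` or their own upsert syntax.

```python
import sqlite3


def upsert_orders(conn, records):
    """Incrementally load records: insert new keys, update existing ones.

    `with conn` commits the transaction on success and rolls it back on error,
    so a failed batch does not leave a half-loaded table.
    """
    with conn:
        conn.executemany(
            """
            INSERT INTO fact_orders (order_id, order_date, net_profit)
            VALUES (:order_id, :date, :net_profit)
            ON CONFLICT(order_id) DO UPDATE SET
                order_date = excluded.order_date,
                net_profit = excluded.net_profit
            """,
            records,
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_orders (order_id INTEGER PRIMARY KEY, order_date TEXT, net_profit REAL)"
)

# Initial load.
upsert_orders(conn, [
    {"order_id": 1, "date": "2024-03-01", "net_profit": 40.0},
    {"order_id": 2, "date": "2024-03-01", "net_profit": 30.0},
])
# The next run delivers only one corrected row and one new row.
upsert_orders(conn, [
    {"order_id": 2, "date": "2024-03-01", "net_profit": 35.0},
    {"order_id": 3, "date": "2024-03-02", "net_profit": 12.5},
])
print(conn.execute("SELECT * FROM fact_orders ORDER BY order_id").fetchall())
# [(1, '2024-03-01', 40.0), (2, '2024-03-01', 35.0), (3, '2024-03-02', 12.5)]
```

Because re-applying the same batch leaves the table unchanged, an upsert-style load is also safe to retry after a partial failure.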

Once data is loaded into the target system, additional tasks are performed to optimize its usability:

- Indexing: Indexes are created to improve query performance, enabling faster data retrieval.

- Data Partitioning: Data can be divided into partitions for easier management and improved query performance. For example, time-based partitioning is often used for time-series data.

- Logging and Monitoring: Comprehensive logging and monitoring mechanisms are established to track the ETL process. This helps identify issues, provides insights into the success of data transfers, and facilitates debugging and analysis.

- Data Quality Checks: Continuous data quality checks are implemented in the target system to ensure that the loaded data remains accurate and consistent. Any discrepancies or anomalies can trigger alerts for further investigation and correction.
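Several of these post-load tasks can be scripted together. The minimal sketch below, again using `sqlite3` and a hypothetical `fact_orders` table, creates an index on the date column, runs a set of SQL quality checks that should each return zero on healthy data, and logs each result with Python's `logging` module. The specific checks are illustrative assumptions. Partitioning is engine-specific (SQLite has no native table partitioning), so it is omitted here.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl.post_load")

# Each check counts offending rows; 0 means the check passes.
# These particular rules are hypothetical examples.
QUALITY_CHECKS = {
    "null_order_date": "SELECT COUNT(*) FROM fact_orders WHERE order_date IS NULL",
    "future_order_date": "SELECT COUNT(*) FROM fact_orders WHERE order_date > date('now')",
    "duplicate_order_id": (
        "SELECT COUNT(*) FROM (SELECT order_id FROM fact_orders "
        "GROUP BY order_id HAVING COUNT(*) > 1)"
    ),
}


def post_load(conn):
    """Create indexes, run quality checks, and return {check_name: bad_row_count}."""
    # Indexing: speed up queries that filter or sort by date.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_orders_date ON fact_orders(order_date)")
    failures = {}
    for name, sql in QUALITY_CHECKS.items():
        bad_rows = conn.execute(sql).fetchone()[0]
        if bad_rows:
            failures[name] = bad_rows
            log.warning("check %s failed: %d offending row(s)", name, bad_rows)
        else:
            log.info("check %s passed", name)
    return failures


conn = sqlite3.connect(":memory:")
# No primary key here, so the duplicate check has something to catch.
conn.execute("CREATE TABLE fact_orders (order_id INTEGER, order_date TEXT, net_profit REAL)")
conn.executemany(
    "INSERT INTO fact_orders VALUES (?, ?, ?)",
    [(1, "2024-03-01", 40.0), (2, None, 30.0), (2, "2024-03-02", 30.0)],
)
failures = post_load(conn)
```

In a production pipeline, a non-empty `failures` result would typically raise an alert or block downstream jobs rather than only being logged.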

Significance of the ETL Process:

The ETL process plays a pivotal role in data automation for several reasons:

- Data Integration: It enables organizations to combine data from a multitude of sources, creating a unified dataset that provides a holistic view of the business.

- Data Consistency: By applying transformations and data quality checks, ETL ensures that the data is consistent, accurate, and adheres to predefined standards.

- Data Accessibility: ETL makes data readily available for analysis and reporting in a format that is optimized for querying.

- Data Enrichment: The process allows for the enrichment of data with additional information, providing context and depth for analysis.

- Historical Tracking: ETL processes can capture historical changes in data, making it possible to analyze data over time and understand trends and patterns.

- Performance Optimization: Techniques like indexing and partitioning in the loading phase improve query performance, ensuring that users can retrieve data quickly.

- Data Quality Assurance: ETL encompasses data cleaning and validation, reducing the likelihood of erroneous or misleading data being used for analysis or decision-making.

Challenges in the ETL Process:

While the ETL process offers numerous benefits, it also presents some challenges:

- Data Volume: Dealing with large volumes of data can be resource-intensive and may require sophisticated infrastructure.

- Data Variety: Sources may provide data in various formats and structures, necessitating complex transformations.

- Data Velocity: In real-time ETL, handling data as it streams in requires robust and scalable systems.

- Data Quality: Ensuring data quality throughout the process is essential, as poor-quality data can lead to inaccurate insights and decisions.

- Data Security: Protecting sensitive data throughout the ETL process is critical for regulatory compliance and privacy.

- Complexity: ETL processes can become quite complex, involving numerous transformations, dependencies, and error handling logic.

- Latency: Reducing the time it takes to extract, transform, and load data is essential, especially when real-time access is required.

Best Practices in the ETL Process:

To ensure the success of ETL processes in data automation, organizations should follow these best practices:

- Data Profiling: Conduct thorough data profiling to understand the source data's structure and quality.

- Data Documentation: Maintain documentation of the ETL processes, including transformations, business rules, and validation checks.

- Incremental Loading: Implement incremental loading whenever possible to reduce data transfer times and resource consumption.

- Error Handling: Establish robust error handling mechanisms to detect and handle failures promptly and avoid data corruption.

- Scalability: Design ETL processes to be scalable, so they can handle growing data volumes and evolving requirements.

- Data Security: Ensure data security throughout the process, including encryption, access controls, and compliance with data protection regulations.

- Testing and Validation: Thoroughly test ETL processes and validate the accuracy and consistency of data at each stage.

- Monitoring and Logging: Continuously monitor the ETL process and log activities to quickly identify and address any issues.

- Version Control: Use version control systems for ETL code and configurations to manage changes and facilitate collaboration.

- Data Lineage: Maintain data lineage records to track the flow of data from source to destination, aiding in traceability and auditing.
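Error handling often distinguishes transient failures, such as a source that is briefly unreachable, from data errors that retrying will not fix. The sketch below is a hypothetical helper, not any particular orchestration tool's API: it retries a step on `ConnectionError` or `TimeoutError` with exponential backoff, logs each attempt, and re-raises any other exception immediately.

```python
import logging
import time

log = logging.getLogger("etl.runner")


def run_with_retries(step, *, attempts=3, base_delay=1.0,
                     retry_on=(ConnectionError, TimeoutError)):
    """Run a pipeline step, retrying transient failures with exponential backoff.

    Exceptions not listed in `retry_on` (for example a ValueError from bad data)
    propagate immediately so they get fixed rather than retried.
    """
    for attempt in range(1, attempts + 1):
        try:
            result = step()
            log.info("%s succeeded on attempt %d", step.__name__, attempt)
            return result
        except retry_on as exc:
            if attempt == attempts:
                log.error("%s failed after %d attempts: %s", step.__name__, attempts, exc)
                raise
            # Wait base_delay, then 2x, 4x, ... between attempts.
            delay = base_delay * 2 ** (attempt - 1)
            log.warning("%s attempt %d failed (%s); retrying in %.2fs",
                        step.__name__, attempt, exc, delay)
            time.sleep(delay)


calls = {"n": 0}


def flaky_extract():
    # Hypothetical step: fails twice with a transient error, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return ["row-1", "row-2"]


logging.basicConfig(level=logging.INFO)
rows = run_with_retries(flaky_extract, base_delay=0.01)
```

Retrying is only safe when steps are idempotent, for example a load that upserts by key instead of appending, so that a step interrupted midway can be re-run without duplicating data.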

Conclusion:

The ETL process is the foundation of data automation, serving as the bridge between raw, heterogeneous data from various sources and a structured, unified dataset ready for analysis and reporting. It involves extracting data from source systems, transforming it to ensure quality and consistency, and loading it into a target system. This process is vital for organizations seeking to leverage their data effectively and make informed decisions based on reliable information.

Despite the challenges and complexities associated with ETL, adhering to best practices and leveraging modern technologies can streamline the process and maximize its benefits. ETL automation tools, cloud-based solutions, and advances in data engineering have made it considerably easier to implement efficient ETL processes that deliver accurate and timely data for decision-making and business insights.